Xabier Barandiaran

xabier@barandiaran.net

http://barandiaran.net

26-06-03

In complex adaptive systems, where internal and external non-linear interactions give rise to an emergent functionality, analytic decomposition into components and the isolated functional evaluation of those components is not a viable methodological practice. More recently, embodied, bottom-up, synthetic methodological approaches have been proposed to solve this problem. Evolutionary simulation modelling (specifically evolutionary robotics) provides an explicit research methodology in this direction. We argue and illustrate that the scientific relevance of such a methodology can best be understood in terms of a double conceptual blending: i) a conceptual blending between structural and functional levels of description embedded in the simulation; and ii) a methodological blending between empirical and theoretical work in scientific research. Simulation models show their scientific value in the reconceptualization of theoretical assumptions, in hypothesis generation and in proof of concept. We conclude that simulation models are capable of extending our cognitive and epistemological resources to (re)conceptualise scientific domains and to establish causal relations between different levels of description.

Complexity and evolutionary simulation models in cognitive science. Epistemology of synthetic bottom-up simulation modelling. v.1.0

Copyright © 2003 Xabier Barandiaran.

Copyleft 2003 Xabier Barandiaran:

The licensor permits others to copy, distribute, display, and perform the work. In return, licensees must give the original author credit. The licensor permits others to copy, distribute, display, and perform the work. In return, licensees may not use the work for commercial purposes, unless they get the licensor's permission. The licensor permits others to distribute derivative works only under a license identical to the one that governs the licensor's work.

This is not meant to be the full license but a short guide to it. The full license can be found at:

http://creativecommons.org/licenses/by-nc-sa/1.0/legalcode

| v.1.0 | 26-06-03 |

Xabier Barandiaran (2003) Complexity and evolutionary simulation models in cognitive science. Epistemology of synthetic bottom-up simulation modelling. v.1.0. url: http://sindominio.net/~xabier/textos/blending/blending.pdf

The Cartesian method of divide and conquer, the decomposition of a system into components and their isolated analysis, has long been the mainstream methodological strategy of scientific enquiry into functional systems, i.e. the strategy of mechanistic explanation.

In the field of cognitive science, computational functionalism has proceeded by what Bechtel and Richardson (1993) call a synthetic top-down decompositional method, i.e. taking functional or task-related cognitive structures (perception, memory, reasoning, action, etc.), dividing them into sub-components, and establishing a set of computational relations among these subsystems.

On the other hand, neuroscientific research has focused on the neurophysiological decomposition of neural structures and on the localisation of such functional cognitive components: an analytic bottom-up approach.

We shall understand localisation (the main mechanistic explanatory strategy) as a mapping between a physical structure (an operationally tractable set of variables, whether biochemical or neurodynamic) and a functional structure (a set of computational components). Advances in neurophysiology and computational functionalism should, in turn, end up providing us with such mechanistic explanations if: a) we do not want to assume a metaphysical dualism, and b) computational-functionalist interpretations of cognitive behaviour are to be considered the 'right' functional interpretation among all the possible ones.

The problem is that recent interest in complex systems has shown that such a methodology (decomposition and localisation) fails to explain nonlinear systems (Langton, 1996). When the interactions between components are non-linear, the principle of aggregation or localisation presupposed in traditional decompositional methods does not hold. The system is more than the sum of its parts: the superposition of isolated components does not give rise to the essential properties of the whole system. It is the non-linear interaction between components that determines the properties of the system, creating a kind of structural complexity in which the relation between function and structure cannot be established by the traditional decompositional method; i.e. no mapping can be established between functional components and structural (neurophysiological) components.
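The failure of aggregation can be made concrete with a toy comparison, offered as an illustration rather than as anything from the original argument: the sketch below measures the gap between a system's response to two simultaneous inputs and the sum of the responses each input produces in isolation. The two example systems, `linear` and `nonlinear`, are hypothetical. For the linear one the gap is zero, so studying each component alone predicts the whole; the nonlinear one, which includes a saturating term and an explicit interaction term, is not the sum of its parts.

```python
import math
from typing import Callable

System = Callable[[float, float], float]

def aggregation_error(h: System, u1: float, u2: float) -> float:
    """Whole-system response minus the sum of the isolated component contributions."""
    baseline = h(0.0, 0.0)
    part1 = h(u1, 0.0) - baseline  # contribution of component 1 acting alone
    part2 = h(0.0, u2) - baseline  # contribution of component 2 acting alone
    whole = h(u1, u2) - baseline   # both components acting together
    return whole - (part1 + part2)

def linear(u1: float, u2: float) -> float:
    # Hypothetical linear system: superposition holds
    return 2.0 * u1 - 0.5 * u2

def nonlinear(u1: float, u2: float) -> float:
    # Hypothetical nonlinear system: saturating response plus an interaction term
    return math.tanh(u1 + u2) + u1 * u2

if __name__ == "__main__":
    print(aggregation_error(linear, 1.0, 2.0))     # 0.0: the whole equals the sum of the parts
    print(aggregation_error(nonlinear, 1.0, 2.0))  # clearly non-zero
```

Any non-zero result means that a description of the components in isolation, however accurate, does not add up to a description of the coupled system.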

In addition to this structural complexity there is an interactive complexity, where the system interacts with its environment in such a way that its overall functionality emerges from highly interactive loops. But complexity can go even further; as Harvey et al. (1997) put it: "Interactions between separate sub-systems are not limited to directly visible connecting links between them, but also include interactions mediated via the environment" (p. 205).

As Clark (1996, 1997) has pointed out, the nature of what he calls interactive emergence seriously compromises the classical computationalist definition of function by:

Thus, in complex adaptive systems where internal and external nonlinear interactions give rise to an emergent functionality, top-down functional or task decomposition and its structural localisation are not a viable methodological practice. In mathematical terms, structural complexity is a consequence of the impossibility of solving analytically the nonlinear differential equations that determine system behaviour, and of the high sensitivity of the system to boundary conditions (when it exploits particular features of the environment to achieve functionality) or, conversely, its metastability under structural perturbations, i.e. its self-regulating capacity.

In cognitive science, both aspects of emergent functionality (the structural and the interactive) gave rise to two different radical modifications of the orthodox functionalist-computationalist research program (Block, 1996; Fodor, 1987). Concerning structural emergence, the PDP approach showed that cognitive processing was not the output of sequential symbol manipulation procedures but the outcome of highly distributed sub-symbolic networks. Interactive complexity has been highlighted by the more recent embodied and situated approach to cognition (Brooks, 1991b,a; Pfeifer and Scheier, 1999).

What embodiment and situatedness illustrate is that the way a specific adaptive function is achieved involves a dynamic coupling between agent and environment in which no structure of the agent can be singled out as sufficient for the function to happen. We can contrast this embodied and situated functionality, which Luc Steels has called emergent functionality (Steels, 1991), with hierarchical systems. Hierarchical systems are those that can be decomposed into different components which perform isolated functions by directly controlling the variables defining the function, i.e. the structure of the mechanism and the function it performs are codefined, and localisation is possible. An example of a hierarchical system is a motor engine where, for example, a valve that controls the flow of oil to the engine performs its function by directly manipulating the size of the gap through which the oil flows.

Bonabeau and Theraulaz (1995) show how the manipulation of boundary conditions that do not define the function itself plays a fundamental role in the performance of emergent functions. Given an environment ![]() and the subset of environmental variables defining a function ![](), a function F is defined as ![](). A structure ![]() performs the function F iff: ![](). What reductionists presuppose is that ![]() remains constant, i.e. ![]() for ![](). In short, reductionists believe that the variables external to those defining a function do not affect how a structure performs that function. Embodiment and situatedness show how agents exploit many features of their body and environment (boundary conditions) to perform functions which are not defined by those body/environment features. In other words, localisation (i.e. mapping between structural and functional components) cannot succeed because the system exploits interactive feedback loops with environmental features to satisfy functionality.

The way some adaptive systems exploit several environmental features to perform functions, together with the highly interconnected and recursive nature of the causal network of their internal structure, does not allow traditional analytic methods to be successfully applied. In this situation new methodologies have been proposed as suitable tools to explore complex systems: what we will call synthetic bottom-up simulation modelling.

During the late 80s and early 90s a new methodological paradigm for the study of complex adaptive systems came into being. Alife (Bedau, 2001; Langton, 1996) and situated robotics proposed a bottom-up, situated and synthetic approach to the modelling of complex systems.

The approach is synthetic because understanding of a system is expected to be achieved by building similar systems; i.e. by synthesis rather than analysis. The approach is bottom-up because the functionality of the system is achieved as emergent from structural local rules and local system-environment interactions rather than from functional components and informational input-output relations between them. Repeated and distributed local interactions give rise to a global pattern of system behaviour; it is in this global pattern that functionality is found, not in the local components of the system, i.e. there is no mapping between structural components and functional components. There is no explicit encoding of the global behaviour. Finally, the approach is situated: systems are built in real or simulated environments with direct sensory-motor links (i.e. input-output relationships are not symbol based). This methodology is the core of Alife techniques (Bonabeau and Theraulaz, 1995; Bedau, 2001; Langton, 1996) and of embodied and situated robotics (Brooks, 1991b,a; Pfeifer and Scheier, 1999), among others. In order to understand the scientific value of such a methodology we shall focus on a well-established and successful specific methodology: evolutionary robotics (Nolfi and Floreano, 2000; Harvey et al., 1997; Husbands et al., 1997) and Randall Beer's minimally cognitive behaviour program (Slocum et al., 2000; Beer, 2001).
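A classic Alife illustration of this bottom-up logic, not taken from the paper, is the elementary cellular automaton: every cell is updated by the same local rule, which looks only at the cell and its two neighbours, yet the run produces a global pattern that is encoded nowhere in the rule. The sketch below assumes periodic boundaries and uses Wolfram's rule numbering; with rule 90 and a single initial live cell, the printed history traces a Sierpinski-like triangle.

```python
from typing import List

def step(cells: List[int], rule: int) -> List[int]:
    """One synchronous update of an elementary (binary, radius-1) cellular automaton."""
    n = len(cells)
    new = []
    for i in range(n):
        # Periodic boundary: cells[-1] wraps to the last cell, (i + 1) % n to the first
        left, centre, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        neighbourhood = (left << 2) | (centre << 1) | right  # local state as a number 0..7
        new.append((rule >> neighbourhood) & 1)  # the rule's bit for that local state
    return new

def run(rule: int, width: int = 31, steps: int = 15) -> List[List[int]]:
    """Start from a single live cell in the middle and record every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history

if __name__ == "__main__":
    for row in run(90):
        print("".join("#" if c else "." for c in row))
```

Nothing in the eight-entry lookup table mentions triangles; the global structure exists only in the repeated, distributed application of the local rule, which is the sense in which functionality in these models is found in the global pattern rather than in the components.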

Since structural decomposition of a complex system fails to grasp the essential local interactions that give rise to functional behaviour, synthesis looks like a natural way to deal with the problem: knowledge is achieved in the synthesis, in the manipulation of parameters and local rules while putting together the components of the system. But the very nature of complex systems makes their synthesis problematic; that is precisely the locus of complexity and, unlike the products of functionalist top-down synthesis, complex systems are not manageable for human understanding. As a solution to this problem, artificial evolution has been widely used to synthesize functional systems. Particular cases of this technique are evolutionary robotics and the minimally cognitive behaviour program.

Evolutionary robotics and evolutionary simulation models have been successfully applied to achieve a number of complex behaviours, among them: plastic development (Floreano and Urzelai, 2000, 2001), robot team coordination and role allocation (Quinn et al., 2002), communication (Quinn, 2001), shape recognition (Cliff et al., 1993), pursuit and evasion (Cliff and Miller, 1995), acoustic coordination (Di Paolo, 2000a), learning (Tuci et al., 2002), adaptation to sensory inversion and other sensorimotor disruptions (Di Paolo, 2000b), active categorical perception (Beer, 2001), short-term memory, self-nonself discrimination, selective attention, attention switching, and anticipation of object movement (Slocum et al., 2000; Gallagher and Beer, 1999; Beer, 1996), etc.

Evolutionary simulation model synthesis proceeds as follows:

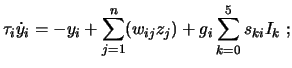

Body and environment can be real or simulated; in the latter case Khepera-like robots are usually simulated (i.e. circular two-dimensional robots with two wheels and different sensors) and the resulting control architecture is exported to the real robot (not without problems (Jakobi et al., 1995)). What is interesting for our discussion is the structure of the neural control architecture. The basic structure of the neural network is generally a Continuous Time Recurrent Neural Network (CTRNN), which is in principle capable of approximating the dynamical behaviour of any other dynamical system with a finite number of variables (Funahashi and Nakamura, 1993). CTRNNs are fully connected, recurrent, dynamic (time- and rate-dependent) control architectures specified by the following state equation:

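$$\tau_i \frac{dy_i}{dt} = -y_i + \sum_{j=1}^{N} w_{ji}\, z_j + g \sum_{k} s_{ki}\, I_k, \qquad z_j = \sigma(y_j + b_j) = \frac{1}{1 + e^{-(y_j + b_j)}} \tag{1}$$

where $y_i$ is the state of each neuron, $\tau_i$ is the time constant (decay constant for neural activity), $w_{ji}$ is the weight of the connection from neuron $j$ to neuron $i$, $z_j$ is the activation of neuron $j$, $y_j$ is $j$'s state and $b_j$ a bias term; $g$ is a gain applied to the overall sensory input to the neuron, $s_{ki}$ is the input weight from sensor $k$ to neuron $i$ and $I_k$ is the input value of sensor $k$. States are initialised at 0 or at a random value, and the CTRNN is integrated using the forward Euler method. All neurons are connected to each other and to themselves.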
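The forward Euler integration of the CTRNN state equation described above can be sketched in a few lines. This is a minimal sketch, assuming the usual logistic activation and a single scalar sensory gain; the function and parameter names (`ctrnn_step`, `tau`, `w`, `bias`, `gain`, `s`, `inputs`, `dt`) are illustrative, not taken from any of the cited implementations.

```python
import math
from typing import List

Matrix = List[List[float]]

def sigmoid(x: float) -> float:
    """Logistic activation (assumed; standard in Beer-style CTRNNs)."""
    return 1.0 / (1.0 + math.exp(-x))

def ctrnn_step(y: List[float], tau: List[float], w: Matrix, bias: List[float],
               gain: float, s: Matrix, inputs: List[float], dt: float) -> List[float]:
    """Advance all neuron states by one forward-Euler step of size dt.

    w[j][i] is the weight from neuron j to neuron i (self-connection when j == i);
    s[k][i] is the weight from sensor k to neuron i; inputs[k] is sensor k's value.
    """
    n = len(y)
    z = [sigmoid(y[j] + bias[j]) for j in range(n)]  # activations from current states
    new_y = []
    for i in range(n):
        recurrent = sum(w[j][i] * z[j] for j in range(n))
        sensory = gain * sum(s[k][i] * inputs[k] for k in range(len(inputs)))
        dydt = (-y[i] + recurrent + sensory) / tau[i]
        new_y.append(y[i] + dt * dydt)  # Euler: y(t + dt) = y(t) + dt * dy/dt
    return new_y

if __name__ == "__main__":
    # Hypothetical 2-neuron, 1-sensor network with arbitrary parameter values
    y = [0.0, 0.0]
    for _ in range(100):
        y = ctrnn_step(y, tau=[1.0, 0.5], w=[[4.0, -2.0], [2.0, 4.0]],
                       bias=[-2.0, -2.0], gain=1.0, s=[[1.0, 0.0]],
                       inputs=[0.5], dt=0.05)
    print(y)
```

All activations are computed from the current states before any state is updated, so every neuron sees the same time-slice of the network. With all weights at zero a neuron simply relaxes towards its sensory drive with time constant $\tau_i$, which is a convenient sanity check on the step size `dt`.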

On top of this basic architecture more complex mechanisms can be implemented, such as GasNets (Husbands et al., 1998) and synaptic plasticity (Floreano and Urzelai, 2001; Di Paolo, 2000b).

Control parameters (plasticity rules, time constants, number of neurons, weight values, etc.) are left to evolutionary search, as are some body and sensor parameters (motor transfer parameters, position of sensors, etc., depending on the particular case). The structure of the simulation is thus defined as a dynamical system and implemented in a simulation model in which the state of the system is numerically calculated over short time-steps.
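To show what it means for controller, body and environment to form a single dynamical system integrated over short time-steps, here is a deliberately tiny, hypothetical instance: a point agent on a line, one sensor reading the signed distance to a target at x = 1, a two-neuron CTRNN with time constants fixed at 1, and a motor output equal to the difference between the two neurons' activations. None of these choices come from the paper; they only show how the agent's own movement changes its next sensory input. The returned value (minus the mean distance to the target over the trial) is the kind of score a genetic algorithm could maximise.

```python
import math
from typing import List

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def evaluate(params: List[float], target: float = 1.0,
             dt: float = 0.1, steps: int = 300) -> float:
    """Run one trial of a hypothetical 1-D agent and return its fitness.

    params = [w00, w01, w10, w11, b0, b1, s0, s1]: w[j][i] is the weight from
    neuron j to neuron i, b the biases, s[i] the weight from the single sensor
    to neuron i. Time constants are fixed at 1.0 for brevity.
    """
    w = [[params[0], params[1]], [params[2], params[3]]]
    b, s = params[4:6], params[6:8]
    y = [0.0, 0.0]  # neuron states
    x = 0.0         # agent position (the "body" and "environment" state)
    total_error = 0.0
    for _ in range(steps):
        sensor = target - x  # hypothetical sensor: signed distance to the target
        z = [sigmoid(y[j] + b[j]) for j in range(2)]
        # Forward Euler update of the two-neuron CTRNN (tau = 1)
        y = [y[i] + dt * (-y[i] + sum(w[j][i] * z[j] for j in range(2)) + s[i] * sensor)
             for i in range(2)]
        # Motor: the difference between the two activations sets the velocity
        z = [sigmoid(y[j] + b[j]) for j in range(2)]
        x += dt * (z[0] - z[1])
        total_error += abs(target - x)
    # Higher (less negative) fitness means staying closer to the target
    return -total_error / steps
```

With all parameters at zero the agent never moves and scores -1.0; setting the two sensor weights to 1 and -1 (everything else zero) gives an agent that approaches the target and settles near it. The target-approaching behaviour is not written anywhere in the controller: it arises from the closed loop in which movement changes sensing and sensing changes movement.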

Once the basic structure of the simulation has been decided, a genetic algorithm is used to evolve the parameters with the following procedure:
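As an illustration only, a minimal generational genetic algorithm over real-valued genotypes might look like the sketch below. Every design choice in it is an assumption rather than the procedure used in the cited works: genes in [0, 1], truncation selection of the top half, one elite individual copied unchanged, and Gaussian mutation with a standard deviation of 0.05. `toy_fitness` and its `TARGET` vector are hypothetical stand-ins for a full simulated trial of an agent in its environment.

```python
import random
from typing import Callable, List

Genotype = List[float]  # real-valued genes in [0, 1], later scaled to model parameters

def mutate(genotype: Genotype, rng: random.Random, sigma: float = 0.05) -> Genotype:
    """Gaussian creep mutation on every gene, clipped back into [0, 1]."""
    return [min(1.0, max(0.0, g + rng.gauss(0.0, sigma))) for g in genotype]

def evolve(fitness: Callable[[Genotype], float], n_genes: int,
           pop_size: int = 30, generations: int = 100, seed: int = 0) -> Genotype:
    """Generational GA with elitism and truncation selection (illustrative choices)."""
    rng = random.Random(seed)
    population = [[rng.random() for _ in range(n_genes)] for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)  # best first
        parents = ranked[: pop_size // 2]  # truncation selection: keep the top half
        # The elite survives unchanged; the rest are mutated copies of random parents
        population = [ranked[0]] + [mutate(rng.choice(parents), rng)
                                    for _ in range(pop_size - 1)]
    return max(population, key=fitness)

# Hypothetical fitness: closeness to an arbitrary target genotype
TARGET = [0.2, 0.8, 0.5]

def toy_fitness(genotype: Genotype) -> float:
    return -sum((g - t) ** 2 for g, t in zip(genotype, TARGET))

if __name__ == "__main__":
    best = evolve(toy_fitness, n_genes=3)
    print(best, toy_fitness(best))
```

Elitism makes the best fitness non-decreasing across generations. In an evolutionary robotics setting the fitness call would run one or more simulated trials, usually with noise, which is where almost all of the computational cost lies.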

In brief, what we get is a simulation model in which local interaction rules (whose parameter values are determined through evolution), recursively applied (through the numerical calculation of states), give rise to a global system behaviour (specified by the evolutionary fitness function). The question now is: how does this modelling technique contribute to scientific development once decomposition and localisation have been shown to be inappropriate methods?

The role of bottom-up synthetic simulation models (and more specifically evolutionary robotics simulations) is, we will argue, that of providing: a) a conceptual blending between lower-level mechanisms and global behavioural patterns, and b) a methodological blending between empirical and theoretical domains. We take the notion of blending (and of conceptual blending in particular) from Fauconnier and Turner (1998), who analyse conceptual blending as a major cognitive process in which projections from two different conceptual spaces blend into a single conceptual space where new relations and structures are discovered, which then feed back to the input spaces, thus producing new knowledge.

The problem of functional decomposition explained above has been sidestepped in traditional scientific practice by dividing natural objects of study into different levels of description, and by identifying specific observables at each level and lawful regularities among those observables. In this way neurophysiological and behavioural or cognitive levels of observation become two separate scientific domains. The problem arises when the localisation of functional (cognitive) components in structural components fails as a result of the underlying complexity of the system.

In such cases we believe that simulation models act as computational and exteriorized conceptual blenders, where two distinct conceptual spaces, structural (neurodynamical) and behavioural (cognitive), merge into the simulation, feeding back to both input domains. Simulation models in evolutionary robotics are not neurobiological models (in fact they are generally very poor in comparison with computational neuroscience models of neurons), nor are they purely cognitive models (which are often built in purely functional or representational terms); they are conceptual blenders between functional and neurobiological models.

At first sight it could be argued that, because the blended space is artefactual, it does not satisfy Fauconnier and Turner's theory, which presupposes that the blended space is a mental space and that cognitive operations on that mental space are the source of new knowledge. Nonetheless, carefully analysed, simulation models do in fact satisfy most (if not all) of the characteristics of mental blending spaces. The neurophysiological input space projects into the blended space through the abstraction of local rules from neurophysiological models. The cognitive input space projects by conceptualising the emergent behavioural pattern as non-trivially cognitive; i.e. the emergent global behaviour is considered a cognitive behaviour. What remains opaque (until the experimenter abstracts explanatory patterns) is the cross-space mapping, because of the dynamical complexity of the emergent phenomena. In the case of evolutionary robotics, artificial evolution is used to create the blended space and numerical calculation to run it; the blended space is thus not purely relational but dynamical.

The main difference between mental and artifactual blended spaces is not their position in relation to the skull (after all, cognitive capacities are well understood as being distributed and also extracranial (Clark and Chalmers, 1998)) but the capacity of computational simulation models to solve differential equations numerically and to implement massive and recursive computations that produce emergent simulations; human manipulation of, transformation of and experimentation with the computational model work as well as they do with mental models.

Having established what evolutionary simulation models are, the question now is what their scientific value is, given that they are neither models of biological phenomena nor models of cognitive functionality, and that they do not even try to fit any empirical data (Di Paolo et al., 2000).

We believe that simulation models are better understood as methodological blenders (this time in a loose sense of blending, only metaphorically related to Fauconnier and Turner's work) between purely empirical and purely theoretical domains (whether these extreme positions exist as such is not an issue here). Especially significant is the relation that simulation models establish between theoretical assumptions (adaptationism, representation, innateness, etc.) and empirical models.

Di Paolo et al. (2000) argue that simulation models work as opaque thought experiments, halfway between empirical models (by virtue of their capacity to produce non-trivial data through the computational emergence of global patterns) and theoretical tools (since they address abstract theoretical/conceptual issues rather than specific empirical targets, unlike biorobotic models (Webb, 2001)). Following their argument, we believe that simulation models show their scientific value in:

The scientific value of computational simulation models can be understood, from a broader perspective, as diminishing the constraints acting upon scientific development, as defined by Bechtel and Richardson (1993). With regard to psychological constraints, it should be clear by now that simulation models extend human capacities by providing a kind of externalized and computationally powerful conceptual space in silico. At the same time, the limits of human understanding when facing large search spaces are now being addressed by artificial evolution as a genuine tool for exploring search spaces (Mitchell, 1996). Thus there are at least two ways in which human psychological capacities are enhanced by simulation models (other than the classical gains in memory capacity, computational power and speed): a) by conceiving dynamical objects composed of highly interacting components, and b) by exploring search spaces with artificial evolution. As for phenomenological constraints, we believe that simulation models produce new artificial phenomena which can be (and often are) studied in their own right, and in a scientifically relevant way when considered as artifactual phenomena blending distinct modelling (scientific) spaces. It is possibly in relation to operational constraints that simulation models have traditionally been considered to make a major contribution, in that they give the experimenter complete control over variables and repeatability under different conditions. Finally, physical constraints are considered by Bechtel and Richardson (1993) as limiting the range of allowed component functions through the requirement that those functions be shown to depend systematically on physical structures.
Once again, we believe that evolutionary simulation models provide one of the most powerful cognitive tools for exploring the systematic dependencies between lower-level mechanistic (physical) constraints and the emergent phenomena they produce, acting themselves (when no further complexity reduction is possible by extracting intermediate explanatory patterns) as explanations of bottom-up causation.

We conclude that simulation models are capable of extending our cognitive and epistemological resources to (re)conceptualise scientific domains and to establish causal relations between different levels of description. Simulation models become, blended with traditional empirical methodology, crucial tools for the scientific research on complex systems and cognitive science.